In today’s online meetings, especially when collaborating across Japan and other regions, the biggest friction point is often language. Even when everyone is discussing the same topic, real understanding can be delayed or lost entirely.

To address this quickly, without waiting for the full AI microservice deployment, we recommend enabling live translation directly inside BigBlueButton. This gives participants near-real-time bilingual subtitles during the meeting:

a) English speaker → Japanese subtitles

b) Japanese speaker → English subtitles

This happens in near real time, while the person is speaking, with no typing required.
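The caption rule above amounts to a simple lookup: each speaker's spoken language determines the subtitle language everyone else sees. The sketch below is a hypothetical illustration only, not BigBlueButton's API or configuration. The `CAPTION_TARGET` table, the `subtitle_language` function, and the `en`/`ja` codes are assumptions chosen for clarity.

```python
# Hypothetical sketch of the caption-direction rule for a bilingual
# English/Japanese meeting. This is NOT a BigBlueButton API; all names
# here are illustrative assumptions.
from typing import Dict

# Spoken language (as declared by the speaker) -> subtitle language.
CAPTION_TARGET: Dict[str, str] = {
    "en": "ja",  # English speech -> Japanese subtitles
    "ja": "en",  # Japanese speech -> English subtitles
}


def subtitle_language(spoken: str) -> str:
    """Return the subtitle language for a speaker's declared spoken language."""
    try:
        return CAPTION_TARGET[spoken]
    except KeyError:
        raise ValueError(f"unsupported spoken language: {spoken!r}") from None


if __name__ == "__main__":
    # Mirrors the meeting example: a Japanese professor and an English-speaking engineer.
    for speaker, lang in [("Professor", "ja"), ("Engineer", "en")]:
        print(f"{speaker} speaks {lang} -> subtitles in {subtitle_language(lang)}")
```

Keeping the rule in a single table means that adding another language pair later (for example, in the Whisper-based phase) would be a data change rather than new logic.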

Why this works well as Phase 1

✓ Fast – can be enabled within the existing BigBlueButton system

✓ No need for apps or external screens

✓ Suitable for workshops, team discussions, technical briefings

✓ Allows both Japanese and English participants to speak comfortably in their native language

✓ Ideal while preparing for the larger Whisper-based AI automation phase

It’s like having a bilingual AI interpreter in the meeting, except this one works silently and has perfect memory.

How it feels for meeting participants

You join a BigBlueButton session as usual.

You select the language you will speak (English or Japanese).

If you want to see subtitles, tap the “CC” (closed captions) icon.

While you or someone else speaks:

If they speak English → subtitles appear in Japanese

If they speak Japanese → subtitles appear in English

Conversations become smoother. No need to “switch languages” or wait for someone to translate. Everyone speaks naturally.

Example

A Japanese professor explains AI farming technology in Japanese.

Almost simultaneously, English-speaking participants see the explanation subtitled in English.

An overseas engineer responds in English.

Japanese members see Japanese subtitles within moments.

This keeps the flow, respect, and clarity of the conversation intact, without anyone needing to interrupt.

Typical latency

Around 0.5–1 second behind the speaker.

Close to real-time conversation. Good enough for discussions and Q&A.

Where Phase 1 is especially useful

AI strategy sessions with Japanese partners

Technical walkthroughs with cross-border teams

University–industry presentations

AI & IoT project collaborations (Kyushu, Fukuoka, Singapore, etc.)

Pre-meeting briefings before more formal interpreted sessions

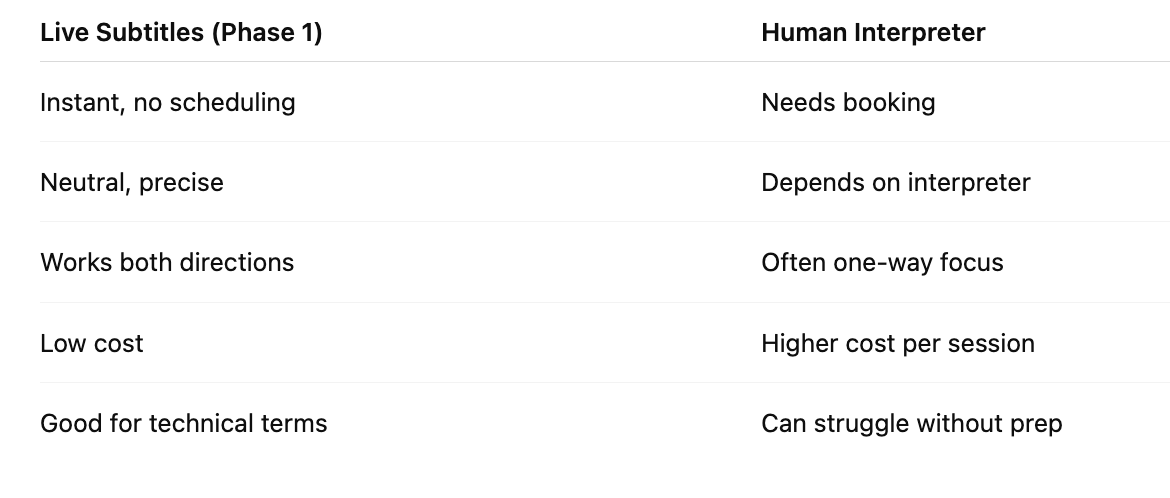

Key benefits vs human interpretation

Things to keep in mind

Requires a good internet connection for speakers

Works best with a headset or a clear microphone

Processing is cloud-based (audio is processed securely online)

For fully offline or privacy-sensitive sessions, use Phase 2, which relies on self-hosted technology

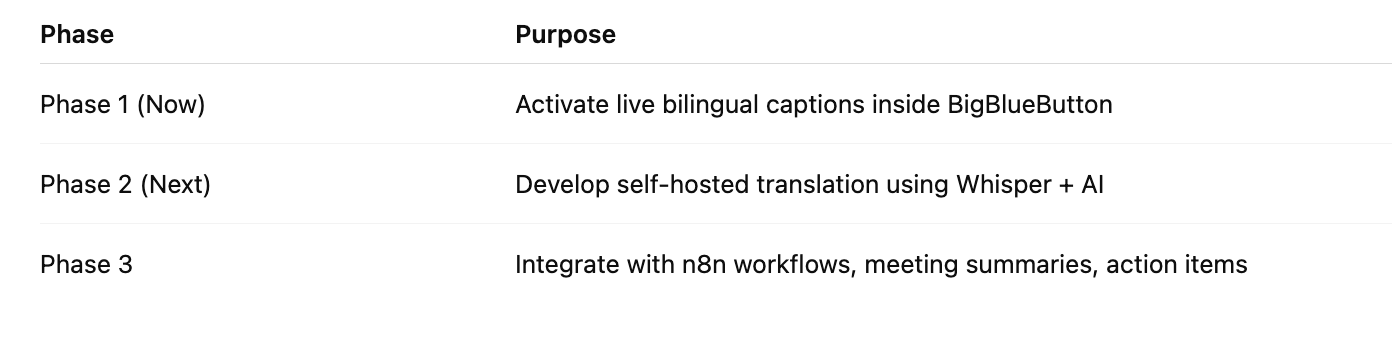

Roadmap – Keeping things simple

Start effortlessly → move toward more powerful solutions.

Final Thought

Phase 1 is perfect when we need to connect teams quickly without waiting for long-term system development. Just enabling this feature can significantly lift meeting productivity and reduce misunderstandings.

It allows participants to remain confident, present ideas clearly, and engage more deeply – regardless of whether they speak English or Japanese.

For collaborations with academia, Fukuoka AI startups, or cross-border farm automation projects, this is the most immediate way to make every voice heard and understood.